Topics

Cluster analysis ✅: Introduction, partition methods, hierarchical methods, density based methods, dealing with large databases, cluster software

Search engines ✅ : Characteristics of Search engines, Search Engine Functionality, Search Engine Architecture, Ranking of web pages, The search engine history, Enterprise Search, Enterprise Search Engine Software.

Cluster Analysis

🚀 Introduction

A cluster is a collection of data objects that are similar to one another within the same cluster and are dissimilar to the objects in other clusters.

The process of grouping a set of physical or abstract objects into classes of similar objects is called clustering.

Clustering Vs Classification

The clustering task does not try to classify, estimate, or predict the value of a target variable like in Classification. Instead, clustering algorithms seek to segment the entire data set into relatively homogeneous subgroups or clusters. By definition, this is an example of unsupervised learning.

🚀 Application

Business: can segment customers into small number of groups for additional analysis and marketing activities

Search Engine Among billions of Web pages, clustering can be used to group these search results into clusters. For instance, a query of “movie” might return Web pages grouped into categories such as reviews, trailers, stars, and theaters.

Climate: Cluster analysis can be applied to find patterns in the atmosphere, pressure, and ocean.

🚀 Partition Methods

Divides the data set of n into k groups, and it must satisfy following requirements :

- Each group must contain at least one object.

- Each object must belong to exactly one group.

K-Mean Clustering

Given a database of n objects, a partitioning method it creates k partitions of the data, where each partition represents a cluster and always k ≤ n.

It creates an initial partitioning, then iteratively allocate objects to cluster.

Algorithm

Input:

k : the number of clusters

D : a data set containing n objects.

Output:

A set of k clusters.

Method:

- Choose

kobjects fromDas the initial cluster centers. - Compute

centroid(mean of a group of points), - Assigning each point to its closest centroid, and recompute the centroid

- Do until all points are covered.

🚀 Hierarchical Methods

A hierarchical clustering method works by grouping data objects into a tree of clusters. Further, classified as agglomerative(bottom-up) or divisive(top-down), depending on whether the hierarchical decomposition is formed in a bottom-up (merging) or top down (splitting) fashion.

A tree-like diagram for graphical representation in hierarchical clusstering is called a dendrogram.

Agglomerative: Starts with individual points as clusters, successively merge the two closest clusters until only one cluster remains. This requires defining a notion of cluster proximity.

Algorithm

- Compute the proximity matrix.

- Merge two closest clusters.

- Update proximity matrix.

Divisive : Start with one, all-inclusive cluster and, at each step, split a cluster until only singleton clusters of individual points remain.

🚀 Density Based Methods

DBSCAN: Density-Based Spatial Clustering of Applications with Noise.

Input : Epsilon(<1.5), Min Pts

Core Point: Have minimum 3 points

Boundary Points : Near to core point, but not a core point

Noise Point : Neither core nor boundary.

Dealing with Large Databases

Cluster Software

Search Engines

🚀 Introduction

Search engines : program that searches for & identifies items in a database that correspond to keywords specified by the user, to find a particular site on the internet.

🚀 Working/Overview

Utilize special programs named “crawler”, that travel along the Web, following links from page to page, and “spiders” use this gathered information to create a searchable index.

Key elements of a web page like page title, description, content and keyword density, decides the ranking (which page should be at first, second, third).

Every search engine uses different algorithms, so are the results on Search Engine Result Page (SERP).

Algorithms continuously updates and are dedicated towards giving users best result.

🚀 Characteristics

Scale : Can handle millions of searches/day and scan billions of docs.

Varied Information types : Sites, Images, Videos, Shopping, Map, News, Weather, Books, Flights, Finance all in one place!

Precise/Best Results : Among billions of sites, it must give the most relevant result to the user.

Fast : Results must load in milliseconds to avoid bounce rates.

Unedited : Quality issues & spam can easily be reported.

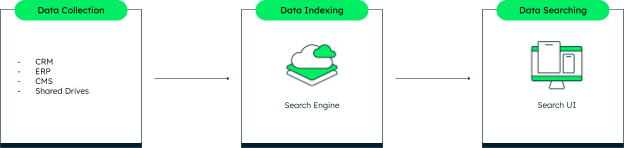

🚀 Architecture

When the index is ready, the searching can be performed through query interface.

A user enters a query into a search engine (typically by using keywords). The application then parses the search request into a form that is consistent to the index.

The engine examines its index and provides a listing of best matching, usually with a short summary containing the document’s title and sometimes parts of the text.

Results are in order based on ranking, which is done according to document relevancy to the query, date updated and popularity among many other factors.

🚀 Functions

Crawling : The crawler, or web spider or bot is a vital software component of the search engine. Looks at all sorts of data such as content, links on a page, broken links, sitemaps, and HTML code validation. To discover new content & update the existing, and it crawls until it cannot find any more information within a site, such as further hyperlinks to internal or external pages. Using sitemaps helps the crawlers. “Robots.txt” helps to schedule crawler, so they don’t visit in maintenance/udpate hours of site.

Crawling is functionality. Crawler is part of search engine.

Indexing : To categorize each piece of content. Once content is crawled, it indexes that content based on the occurrence of keyword phrases in each individual website.

The indexing function of a search engine first excludes common articles such as “the,” “a” and “an.” Thus, stores the content in an organized way for quick and easy access.

Indexing is functionality. Index is part of search engine.

Storage & Results : Store large amounts of data ranging in the terabytes. The most relevant results according to ranking are shown on SERP.

🚀 Ranking

- Security(SSL/HTTPS)

- Domain (Keyword, Authority)

- Site (Title, Description)

- Meta Keywords

- Quality Content(H1, Image(Alt Text, Description), URL)

- Backlinks(Quality Citations)

- Speed(Load Time, Mobile Optimization)

🚀 History

Manual : Earlier search engines were created and maintained manually, by humans. Expensive, Slow, Somewhat Subjective and limited number of sites could be indexed, later it couldn’t possibly keep up expansion in number of sites.

Archie : The First Search Engine, developled for FTP Sites. Later Mathematics and English together used, for Statistical Analysis of Word Relationships and Archietext was introduced.

To measure the active count of web servers & growth of the web, WWWW bot was created. URLs were captured and later this database came to known as Wandex

Bot Based : JumpStation, the World Wide Web Worm, Wandex were two popular bots based search engines. JumpStation gathered title and header from web pages and retrieved these by using a simple linear search. The WWW Worm was great because it indexed both titles and URL’s. Unfortunately, JumpStation and the World Wide Web Worm listed results in the order they found them and if you did not know the exact name of the site you were looking for, you might not ever find it.

Yahoo : It started as a listing of their favourite websites but with each entry, besides the URL, also had a page description. Later got funded.

Web Crawler : First search engine to Index Entire Pages

Lycos : A monstrous new search engine named Lycos went public with a catalog of 54,000 documents. Added Prefix Matching and Word Proximity to Search Results. Its huge size, indexed 60 million documents.

Ask Jeeves : A natural language search engine, using Direct Hit technology, used human editors to try to match search queries.

Teoma Developed Clustering Techniques to Determine Site Popularity, used “clustering” to organize sites by Subject Specific Popularity—they tried to find local web communities in order.

Google : Ability to analyze the “back links” pointing to a given website. BackRub ranked pages using “citation notation”. All links counted as votes, higher the votes higher the counting. The mission is to organize the world’s information and make it universally accessible and useful.

Advanced Filters : Crawling Patterns, Link Analyzation. Google continues to improve. In doing so, they include a myriad of factors to bring the best of the best results.

People are still only willing to look at the first few tens of results. New tools with very high precision (number of relevant documents returned, say in the top tens of results).

🚀 Enterprise Search

Enterprise Search Software Programs for organized retrieval of structured and unstructured data within an organization.

🚀 Enterprise Search Softwares

- ElasticSearch, Elastic Enterprise Search

- Agolia, Yext

- Amazon OpenSearch/ElasticSearch/Kendra Service

- IBM Watson Discovery.

Comments

Post a Comment